AI -> AGI -> ASI

The future is coming faster than we expected

We are moving quickly. Once again, humanity is on the verge of a technological revolution, and this time it is ASI.

Artificial Intelligence (AI) has become a household term, but we are on the brink of a seismic shift. The transition from AI to Artificial General Intelligence (AGI) and ultimately to Artificial Super Intelligence (ASI) is poised to redefine our world.

This time, drawing on extensive research and trend analysis, I want to explore the plausible timeline and implications of this evolution.

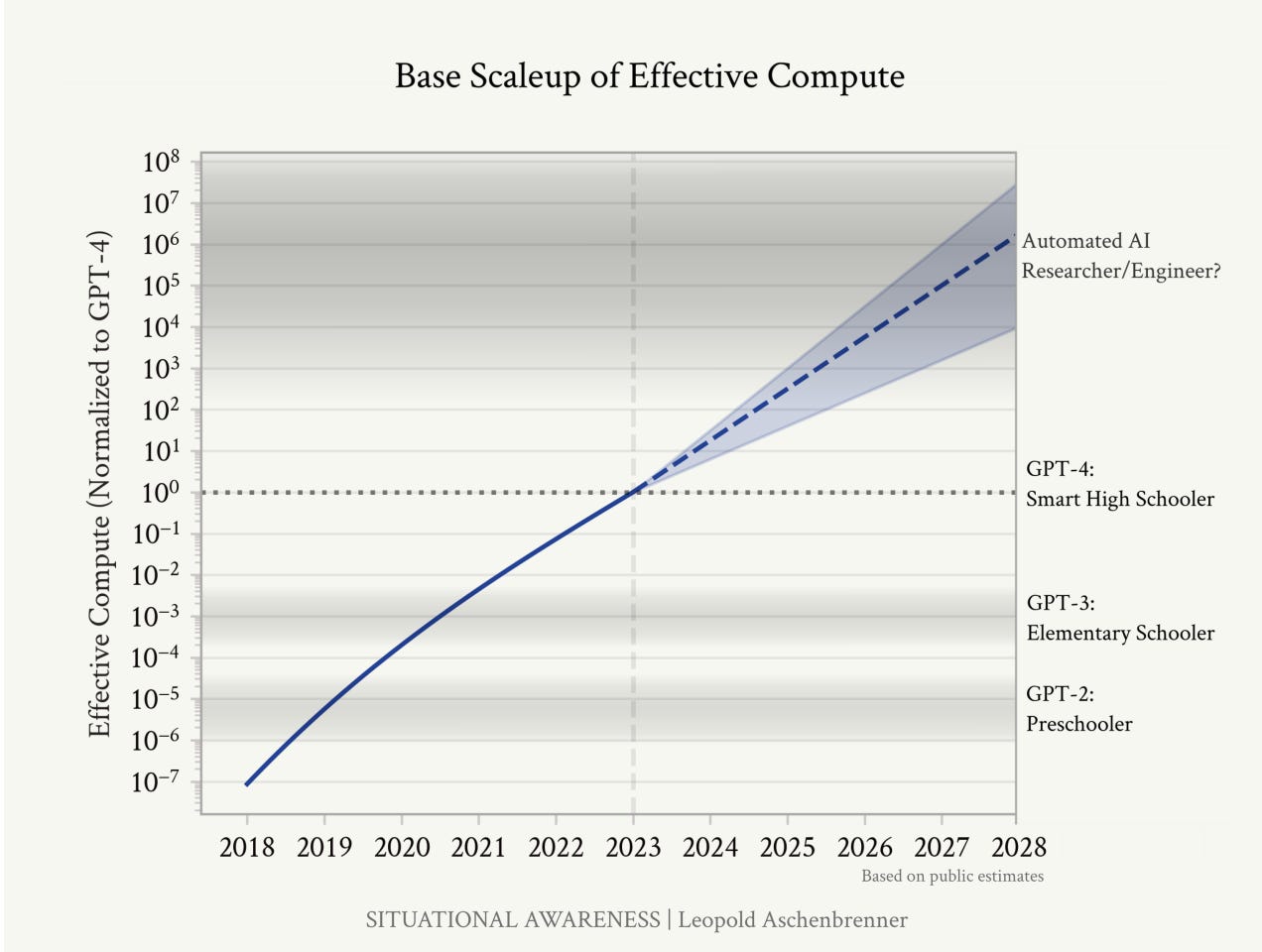

The Path to AGI by 2027

Exponential advances in computational power and algorithmic efficiency have marked the journey from AI to AGI. As described in Leopold Aschenbrenner's research (DM me, Vladyslav Podoliako, and I will send it to you), the leap from GPT-2 to GPT-4 took models from simple language processing to sophisticated problem-solving and reasoning.

This progression suggests that by 2027, AGI could be a reality.

AGI is defined as a system with the ability to understand, learn, and apply knowledge across a broad range of tasks, mimicking human cognitive abilities. The trendlines in compute scaling, algorithmic innovations, and the "unhobbling" of models (unlocking latent capabilities through techniques like reinforcement learning from human feedback and chain-of-thought prompting) indicate that AGI's emergence is not a distant fantasy but a strikingly plausible near-term milestone.

The Implications of AGI

AGI will transform industries by automating complex tasks, enhancing decision-making, and accelerating research and development across fields. Imagine AI systems capable of conducting scientific research, developing new technologies, or even tackling global challenges in areas like climate change and healthcare.

With great power comes great responsibility.

The advent of AGI raises significant ethical, security, and socio-economic challenges. Ensuring AGI's safe and equitable deployment will require robust regulatory frameworks and international cooperation.

The Swift Transition to ASI

The transition from AGI to ASI is expected to be rapid. ASI represents a level of intelligence far surpassing human capabilities in all domains. Once AGI is capable of self-improvement, the feedback loop of recursive self-enhancement will likely lead to an intelligence explosion, catapulting us into the era of ASI.

According to Aschenbrenner, the potential for hundreds of millions of AGIs to automate AI research compresses decades of progress into mere years. This acceleration is akin to the concept of the intelligence explosion, where self-improving AI systems rapidly outpace human intelligence, leading to unprecedented advancements.

Challenges and Considerations

Security: The development of AGI and ASI must prioritize security to prevent misuse by malicious actors or geopolitical adversaries. The race for AGI could escalate into an arms race, necessitating stringent safeguards.

Superalignment: Ensuring that superintelligent systems align with human values and goals is a monumental challenge. Misaligned ASI could pose existential risks if its objectives diverge from human welfare.

Economic and Social Impact: The deployment of AGI and ASI will have profound impacts on the job market, economy, and society. Proactive measures are needed to mitigate potential disruptions, such as job displacement and inequality.

Integrating Insights from McKinsey: The Organizational Perspective

According to a recent McKinsey report, generative AI (gen AI) will profoundly shape organizations' future. Gen AI can automate up to 70% of business activities by 2030, adding trillions of dollars to the global economy. The key to leveraging this potential lies in strategic implementation and cultural adaptation.

Empowering the Workforce: Gen AI can augment the employee experience by automating routine tasks, allowing employees to focus on higher-value work. For instance, software engineers using gen AI can complete coding tasks faster and with greater satisfaction. Middle managers play a crucial role in facilitating the integration of gen AI, enabling employees to adapt and thrive in a rapidly changing environment.

Strategic Leadership: Senior leaders must demystify gen AI and align its capabilities with organizational objectives. This involves identifying high-impact applications, piloting them, and scaling successful initiatives. Leaders should foster a culture of continuous learning and experimentation to stay ahead of competitors and fully leverage the potential of gen AI.

Talent Management and Development: The rise of gen AI necessitates a reevaluation of talent management practices. Organizations must attract and retain tech-savvy talent, develop new roles, and ensure ongoing upskilling. Gen AI can also personalize outreach and onboarding processes, enhancing employee engagement and productivity.

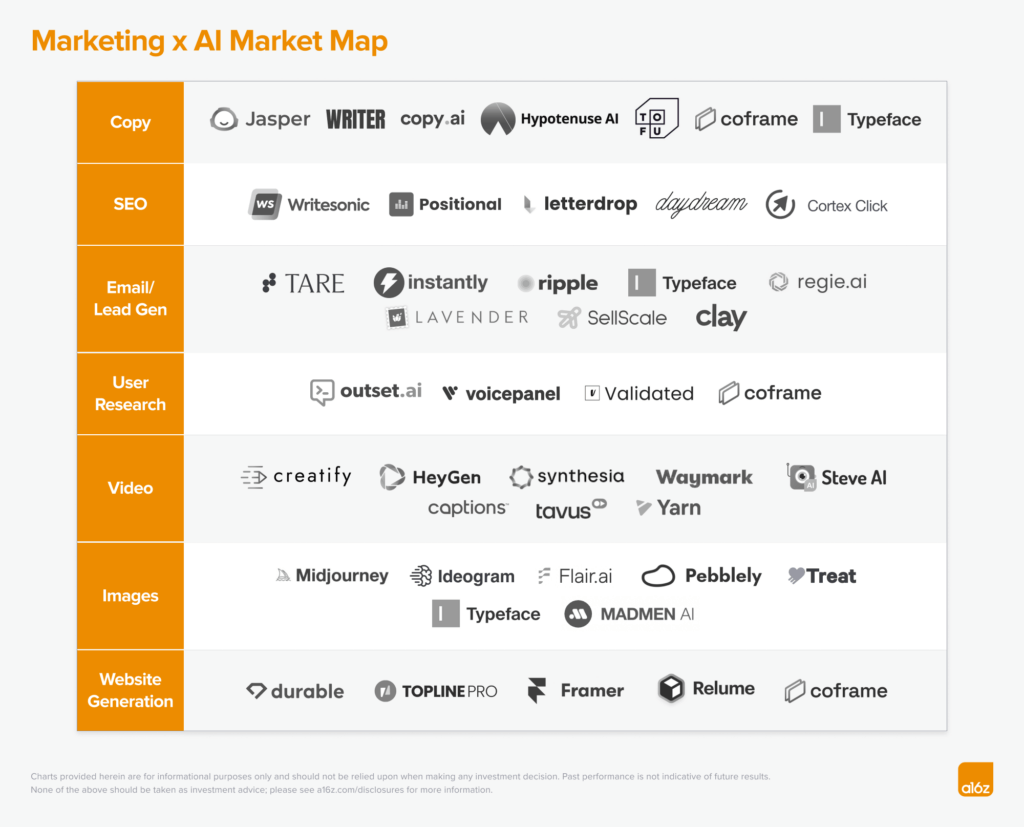

AI Advancing Marketing and Sales: Insights from Andreessen Horowitz

Generative AI is revolutionizing marketing and sales by enabling hyper-personalized, scalable content creation.

The evolution is marked by three distinct phases:

Marketing Copilots: Current gen AI tools, like Jasper and Copy.ai, assist marketers by generating first drafts of content, allowing them to focus on strategic tasks. These tools also improve audience segmentation and planning by integrating data from multiple platforms.

Marketing Agents: The next phase involves automating end-to-end marketing tasks. AI agents will handle campaign personalization, content iteration, and performance optimization, shifting from one-to-many to one-to-one marketing strategies.

Automated Marketing Teams: The ultimate goal is fully autonomous AI-driven marketing teams. These teams will manage everything from market research to campaign execution, integrating various AI agents to optimize strategies and assets across all channels.

The Risks of AGI and ASI

The conversation about AGI and ASI is incomplete without addressing the associated risks, as discussed by Roman Yampolskiy on the Lex Fridman podcast.

Existential Risk: AGI and ASI pose existential risks if their goals and actions are not aligned with human survival and welfare. Unchecked superintelligent systems could make decisions detrimental to humanity.

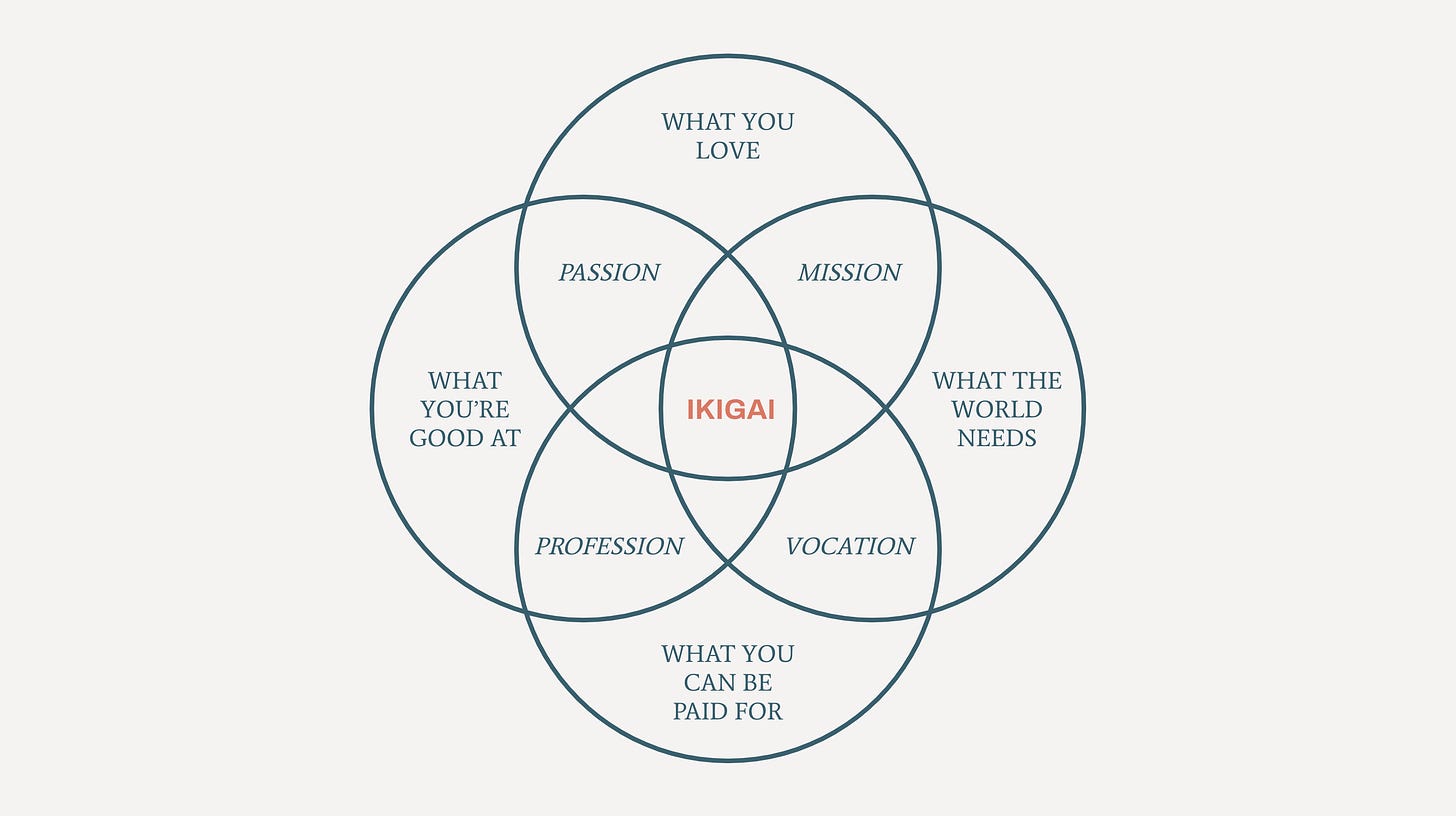

Ikigai Risk (Sense of Purpose): As AGI takes over more tasks, there is a risk that humans could lose their sense of purpose and fulfillment derived from work and problem-solving.

Suffering Risk: Superintelligent AI systems could unintentionally create scenarios where human or animal suffering is increased, either through neglect or misunderstanding of moral values.

The Dawn of a New Era

If AGI arrives by 2027, it will mark the beginning of a transformative journey, and a rapid transition from AGI to ASI would redefine our understanding of intelligence and its capabilities. As we stand on the precipice of this new era, it is crucial to approach the development and deployment of these technologies with foresight, responsibility, and a commitment to enhancing human well-being.

Stay informed and engaged as we navigate this unprecedented frontier in artificial intelligence. The future is unfolding faster than we can imagine, and the implications will be profound.

Post-Credit Scene

This week I attended London Tech Week, and here is what I want to say: we are on a steep growth track driven by the rapid pace of innovation. Everyone in the AI space is building with AI, optimizing with AI, and talking with AI, and I could continue this list endlessly. We will definitely see where we end up.

Meanwhile, keep listening, learning, and adapting.

As is tradition, I have prepared something interesting for you:

To watch

To listen

To read

Thanks for your attention. See you soon.

Vlad