Subject Matter Expert at Any Matter

Late to publish, early to the ball: what I learned testing GPT-5

I’ve been playing more and more tennis lately, which is probably why this week’s edition is late.

I’m still chasing more balls into the fence than I’d like to admit, but between serves and rallies, I’ve been thinking about something else that also demands precision, timing, and reading the other side — working with the latest AI models and figuring out where they fit into my entrepreneurial game.

Whenever a new model comes out, I try to ignore the benchmarks, the pricing charts, and the press release bullet points. I want to see how it thinks before I see how it performs.

So before touching a single token, I asked GPT-5 something that had nothing to do with coding, copywriting, or workflow automation:

If you could educate every person with one sentence, what is the single most important thing you would tell them?

It didn’t pause. It didn’t hedge. It didn’t ask for context. It just said:

“Remember that you are a temporary steward of knowledge accumulated by many generations and of the living world, so treat your beliefs as testable hypotheses, treat people as equal partners in improving shared conditions, treat nature as a finite life capital, and direct your actions so that you expand the possibilities of future lives rather than narrow them.”

That’s not a “clever” line. It’s humility, cooperation, and ecological responsibility compressed into a single operational frame.

It’s also a sign of where we are now: GPT-5 doesn’t just output. It frames. And that framing matters more than most of the discourse will admit.

GPT-5 enters play

On August 7th, OpenAI did not send invites, run a teaser video, or ask anyone to “join the waitlist.”

They simply dropped GPT-5 into the API and ChatGPT like it was the most normal thing in the world. That might itself have been a marketing move.

The surprise was not speed, although it is faster. It was not context size, although 400k tokens is a big leap.

The surprise was that GPT-5 finally behaves like a dependable subject-matter expert. You can ask for speed and get speed. You can ask for depth and get depth.

There is no more prompt origami to stop it from wandering.

The reasoning effort and verbosity controls do exactly what they say.
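For the developers reading: as I understand OpenAI’s GPT-5 developer docs, these two knobs live in the Responses API as `reasoning.effort` (minimal, low, medium, high) and `text.verbosity` (low, medium, high). The sketch below only builds the request body so you can see where speed and depth are set; the helper name `build_gpt5_request` is my own, and you should check the current API reference before relying on the exact field names.

```python
import json

# Allowed values as I read the GPT-5 launch docs (an assumption; verify
# against the current API reference, since these can change).
REASONING_EFFORTS = {"minimal", "low", "medium", "high"}
VERBOSITY_LEVELS = {"low", "medium", "high"}


def build_gpt5_request(prompt: str, effort: str = "medium",
                       verbosity: str = "medium", model: str = "gpt-5") -> dict:
    """Return a Responses API request body with explicit speed/depth knobs."""
    if effort not in REASONING_EFFORTS:
        raise ValueError(f"unknown reasoning effort: {effort}")
    if verbosity not in VERBOSITY_LEVELS:
        raise ValueError(f"unknown verbosity: {verbosity}")
    return {
        "model": model,
        "input": prompt,
        "reasoning": {"effort": effort},   # how hard the model thinks
        "text": {"verbosity": verbosity},  # how long the answer is
    }


if __name__ == "__main__":
    # "Ask for speed, get speed": minimal reasoning, short answer.
    fast = build_gpt5_request("Summarise this ticket in one line.",
                              effort="minimal", verbosity="low")
    print(json.dumps(fast, indent=2))
```

With the official `openai` Python package, a body like this can be passed as keyword arguments to `client.responses.create(...)`. Asking for depth is the same call with `effort="high"`.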

And right on schedule, the AGI panic started: Is this it? Is this the moment?

If GPT-5 is AGI, my coffee machine is sentient or celestial because it remembered my order twice.

We are not there.

But we are definitely somewhere new, with a general-purpose model that is not just good, but trustworthy enough to embed in serious workflows.

Where it actually delivers

Benchmarks look good: 74.9% on SWE-Bench Verified, 88% on Aider Polyglot, 96.7% on τ²-Bench Telecom for tool stability. If you’re not familiar:

SWE-Bench Verified is like a stress test for coding models, using real GitHub issues and checking if the model can fix them without breaking anything else.

Aider Polyglot measures whether a model can handle coding in multiple languages, not just the popular ones.

τ²-Bench Telecom tests how well a model can call tools in the right order over long, complex sequences.

It also beats older models in frontend design tasks and can chain dozens of tool calls without losing the plot.

But the real win is reliability in messy reality. You can hand GPT-5 a live repo, request changes, and see it produce commits that run without a day of cleanup. You can drop in a 200-page policy document and get a summary that’s not only coherent but directly relevant to your question.

For years we’ve been duct-taping models into workflows and hoping they didn’t hallucinate themselves into a wall. GPT-5 is the first release where I’d hand it the keys to a critical process and expect it to bring the car back intact.

Competition Theatre

This is not just about which model is smartest anymore.

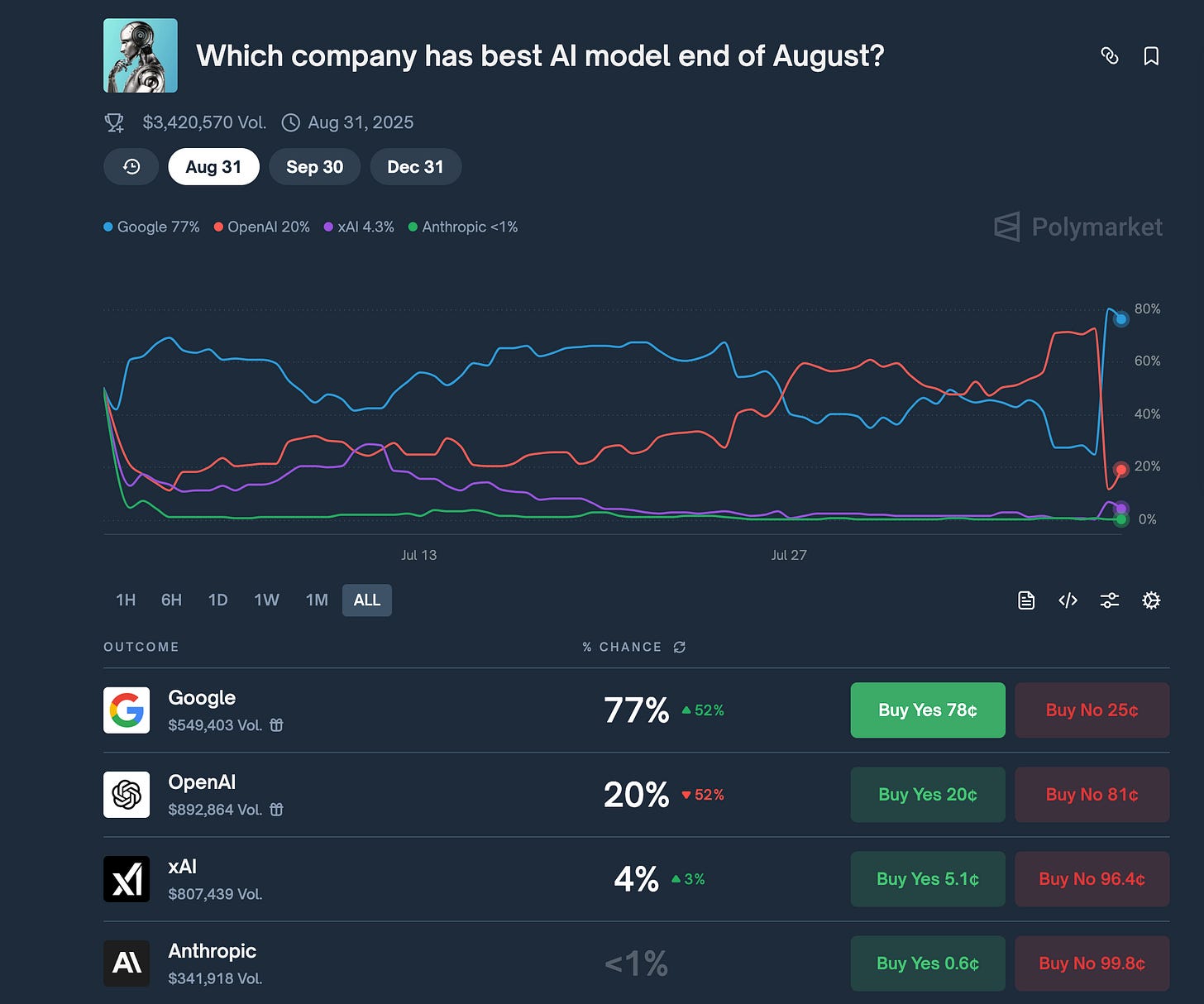

Polymarket is already taking bets on which company will have the best AI model at the end of August. Right now, after OpenAI’s presentation, Google is in the lead.

Gemini is cheap, fast, and still very strong in certain workloads. But the bigger risk for Google isn’t technical — it’s behavioural. The search habit is broken. We no longer type keyword spells. We ask questions in natural language and expect complete answers. Whoever wins that first question wins the entire point. GPT-5 just improved its first-question game dramatically.

Anthropic is playing a different style. Opus 4.1 ties or beats GPT-5 on some coding and creativity benchmarks, and Claude Code feels bolder in multi-agent work. But pricing is its weak spot: GPT-5 Mini costs $0.25 per million input tokens and standard GPT-5 costs $1.25, while Claude Opus costs $15.
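To make that price gap concrete, here is a small calculation using only the input-token prices quoted above. Output tokens are priced differently and are left out; the dictionary keys are my own labels rather than exact API model IDs, and the 10 million tokens is a hypothetical monthly volume.

```python
# Input-token prices in USD per million tokens, as quoted in the text.
# Output-token prices differ and are deliberately not modelled here.
PRICE_PER_M_INPUT = {
    "gpt-5-mini": 0.25,
    "gpt-5": 1.25,
    "claude-opus-4.1": 15.00,
}


def input_cost(model: str, input_tokens: int) -> float:
    """Dollar cost of sending `input_tokens` input tokens to `model`."""
    return PRICE_PER_M_INPUT[model] * input_tokens / 1_000_000


def savings_vs(cheaper: str, pricier: str) -> float:
    """Fractional saving on input tokens when switching to the cheaper model."""
    return 1 - PRICE_PER_M_INPUT[cheaper] / PRICE_PER_M_INPUT[pricier]


if __name__ == "__main__":
    tokens = 10_000_000  # hypothetical month of input traffic
    for name in PRICE_PER_M_INPUT:
        print(f"{name}: ${input_cost(name, tokens):,.2f}")
    print(f"Mini vs standard: {savings_vs('gpt-5-mini', 'gpt-5'):.0%} cheaper")
```

At that volume the input bill is $2.50 on Mini, $12.50 on standard GPT-5 and $150.00 on Opus, and Mini comes out 80% cheaper than standard GPT-5 on input.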

This is now a routing war. Latency, cost, and reliability will decide the winner, not brand loyalty.

Local offline models, free to use

In all the GPT-5 noise, OpenAI quietly released open-weight models, something it hasn’t done since GPT-2 in 2019.

These are GPT-OSS-20B and GPT-OSS-120B, built for reasoning and tool use and fully downloadable for offline use.

The easiest way to use them is with Ollama, a lightweight app that lets you download, run, and manage large language models locally. Think of it as a model player for your laptop. It works on macOS, Linux, and Windows.

Why it matters, even if you’re not a developer:

Your data never leaves your machine, so it stays private

You pay nothing per request after downloading

It works without internet — perfect for travel or secure environments

Getting started is simple:

Go to ollama.com, download the app for your system, install it, open it, and search for gpt-oss:20b.

Once it’s downloaded, you can talk to it right from your computer, no cloud required.
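If you prefer the terminal, `ollama run gpt-oss:20b` opens an interactive chat. Ollama also runs a local server (port 11434 by default) with a `/api/generate` endpoint, so your own scripts can use the model too. A minimal sketch using only Python’s standard library, assuming Ollama is running and `gpt-oss:20b` is already pulled; the function names are mine.

```python
import json
import urllib.request

# Ollama's local server listens on port 11434 by default.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "gpt-oss:20b") -> urllib.request.Request:
    """Prepare a non-streaming generate call to a local Ollama server."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        OLLAMA_URL,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local(prompt: str, model: str = "gpt-oss:20b") -> str:
    """Send the prompt and return the model's text; nothing leaves the machine."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=300) as resp:
        # With stream=False, Ollama returns one JSON object whose "response"
        # field holds the full generated text.
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    try:
        print(ask_local("Give me three drills to improve my tennis serve."))
    except OSError as err:  # URLError is a subclass of OSError
        print(f"Could not reach Ollama at {OLLAMA_URL}: {err}")
```

Setting `"stream": False` trades a live typing effect for a single, simple JSON reply, which is easier to handle in scripts.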

The 20B model runs on machines with around 16 GB of memory, which covers many modern MacBooks and mid-range PCs. The 120B model is for serious hardware, roughly a single 80 GB GPU.

This isn’t a replacement for GPT-5 in the cloud.

It’s your sovereign backup — if the API goes down, prices spike, or the work is too sensitive to send out, you still have a home court.
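In code, that home court can be a simple fallback: sensitive work goes straight to the local model, and everything else tries the cloud first and drops to local on failure. A sketch under loose assumptions: `cloud` and `local` are hypothetical functions you supply (for example a GPT-5 API call and the Ollama helper), each taking a prompt and returning text.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical signature: any function that takes a prompt and returns text.
Ask = Callable[[str], str]


def ask_with_fallback(prompt: str, cloud: Ask, local: Ask,
                      sensitive: bool = False) -> tuple[str, str]:
    """Route a prompt to the cloud model, falling back to the local one.

    Returns (answer, source) so you can log how often the backup was used.
    """
    if sensitive:
        # Too sensitive to send out: never touch the cloud.
        return local(prompt), "local"
    try:
        return cloud(prompt), "cloud"
    except Exception:  # outage, rate limit, network error, etc.
        return local(prompt), "local"
```

Catching every `Exception` keeps the sketch short; in a real pipeline you would narrow it to network and API errors so genuine bugs still surface.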

Field Notes

The biggest waste of GPT-5 is treating it like a novelty. The biggest win is making it part of your operational setup. Used as a toy, it soon feels stupid.

Here’s what I’m testing now:

Agentic coding for scoped tasks with tests and tools in the loop

Economic routing — GPT-5 for hard reasoning, Gemini Flash for cheap transforms, Claude Code for coding, GPT-OSS for sensitive flows

First-ask capture — because the model that takes the first question and delivers the answer owns the relationship
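The economic routing split above can be written down as a plain lookup table, which makes it easy to review and change. A minimal sketch: the task categories and model labels are hypothetical names I chose for illustration, not API identifiers, and the one extra rule is that anything containing private data always goes to the local model.

```python
from enum import Enum


class Task(Enum):
    """Hypothetical task categories; replace with your own."""
    HARD_REASONING = "hard_reasoning"
    CHEAP_TRANSFORM = "cheap_transform"
    CODING = "coding"
    SENSITIVE = "sensitive"


# Mirrors the split described above. Labels are illustrative, not API IDs.
ROUTES = {
    Task.HARD_REASONING: "gpt-5",
    Task.CHEAP_TRANSFORM: "gemini-flash",
    Task.CODING: "claude-code",
    Task.SENSITIVE: "gpt-oss-20b (local)",
}


def route(task: Task, contains_private_data: bool = False) -> str:
    """Pick a model for a task; private data always stays on the local model."""
    if contains_private_data:
        return ROUTES[Task.SENSITIVE]
    return ROUTES[task]
```

Keeping the routes in one table means that when prices or quality shift, you change one line instead of hunting through your codebase.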

The job panic is a distraction

The loudest question around GPT-5 is jobs. Who keeps theirs, who loses theirs, who becomes obsolete. My view:

GPT-5 doesn’t want your job. It wants the boring 30 percent of it — the admin, the repetitive debugging, the drafting you’d rather delegate.

That’s the real shift. You’re not being replaced. Your low-leverage hours are. The winners will be the ones who reallocate those hours into higher-value work before everyone else.

Post-Credit Scene:

That first sentence GPT-5 gave me is not about AI at all. It is about operating in a world where change moves faster than comprehension.

If we treated our beliefs as testable hypotheses…

If we treated each other as partners…

If we treated nature as finite life capital…

Then AI would not just be a technical upgrade. It would be the thing that forces us to act like a civilization worth preserving.

But I promised something useful here, so here is how to integrate GPT-5 without drowning in hype:

1. Run a three-model eval this weekend

Take 5 golden tasks from your actual workflow. Run them on GPT-5 Standard, GPT-5 Mini, and GPT-OSS 20B. Log cost, latency, and fix time. Pick the cheapest model that clears the bar.

2. Route by outcome, not by loyalty

If Mini or Gemini Flash does the job for 80 percent less, use it. Brand is not strategy. Results are.

3. Keep a sovereign backup

Set up Ollama with GPT-OSS now. When a cloud outage or pricing shift happens, you will already have an offline pipeline ready.

4. Track the first-ask metric

If your product, service, or team is not taking the first question in a process, you are already losing ground. GPT-5 is now competitive at that entry point.
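Step 1 of this checklist, picking the cheapest model that clears the bar, is easy to fudge by eye, so here is a sketch of the selection logic. The record fields follow the metrics named in step 1 (cost, latency, fix time); the `EvalResult` and `pick_model` names and the default thresholds (every golden task passes, at most 10 minutes of fixing) are my assumptions, so set your own bar.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class EvalResult:
    """One model's aggregate numbers over your golden tasks."""
    model: str
    passed: int          # golden tasks whose output met your quality bar
    total: int           # number of golden tasks (e.g. 5)
    cost_usd: float      # total spend across the tasks
    latency_s: float     # mean latency per task, in seconds
    fix_minutes: float   # human time spent fixing outputs


def pick_model(results: list[EvalResult], min_pass_rate: float = 1.0,
               max_fix_minutes: float = 10.0) -> EvalResult | None:
    """Return the cheapest model that clears the bar, or None if none does."""
    qualified = [
        r for r in results
        if r.passed / r.total >= min_pass_rate and r.fix_minutes <= max_fix_minutes
    ]
    # Cheapest first; lower latency breaks ties.
    return min(qualified, key=lambda r: (r.cost_usd, r.latency_s), default=None)
```

Returning `None` when nothing qualifies is deliberate: it tells you to raise the budget or rethink the task rather than quietly shipping the least-bad option.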

The real divide ahead will not be AI versus humans. It will be humans with AI versus humans without it.

Aaaaand now, some content to watch.

GPT-5 Presentation

To recharge and switch off from AI, watch the film King Richard. It’s motivating and inspiring.

I've been into tennis for the last few weeks, trying to conquer it and play well.

Or, if you’d rather watch a thriller, I suggest Untamed. No spoilers, just the trailer.

Thanks for reading.

Vlad
